It has been an exciting few weeks for the XA Secure team. We formally joined Hortonworks on 5/15 and have received a warm welcome from our new peers. Even more exciting are the numerous discussions we have had with current customers and prospects on how we can bring comprehensive and holistic security capabilities to HDP. We now begin the journey to incubate our XA Secure functionality as a completely open source project governed by the Apache Software Foundation.

With all the excitement of the acquisition behind us, let’s take a deeper look at the road ahead as we work to define completely open source security for Hadoop. We also encourage you to register for our webinar next Tuesday, where we will outline these new features.

Holistic security approach: Why is it important in Hadoop?

With the introduction of YARN in HDP 2.0, Hadoop now extends beyond its batch roots and has moved into the mainstream of daily data processing and analytics with real-time, online, interactive, and batch applications. Hadoop is more mission critical than ever, and with this come stringent enterprise requirements, especially for security.

Traditional security controls, which worked well for siloed applications and data storage marts, are no longer adequate in the land of data lakes. Some of the challenges include:

- Data that was once well protected within singular environments now suddenly co-exists with multiple sets of data and business processes.

- Within a data lake, access to the cluster may be provided for many users, but we must still protect access to singular data sets or subsets of data within the overall system.

- A lake also allows multiple applications to access and work on a single set of data (two apps on a single operational store or stores). Walls must be constructed to protect the data from misuse between applications.

These challenges cannot be met by solving each issue in isolation. A comprehensive approach is needed, and ultimately any security solution must address this key question: How do we secure Hadoop while keeping its open architecture and its scalability to run any use case the enterprise needs?

Hortonworks and XA Secure share a belief in providing a comprehensive, holistic security approach that can answer this question. Together, we will now execute on this vision, completely in the open.

What does comprehensive security mean for Hadoop?

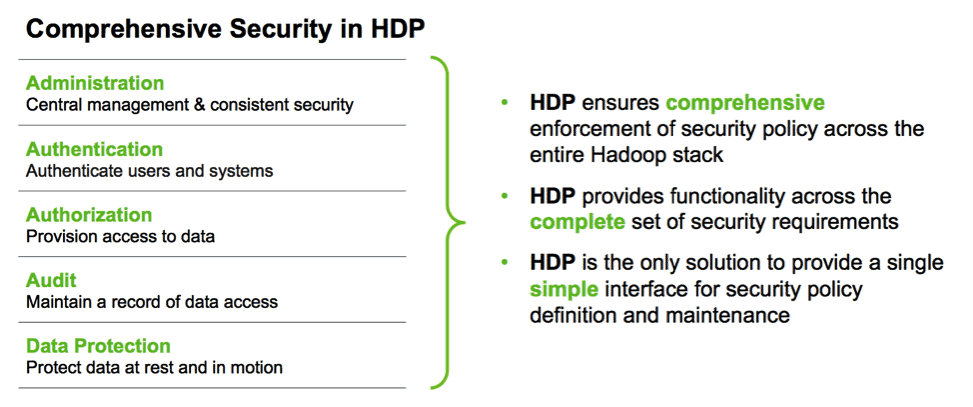

A Chief Security Officer (CSO) or Director of IT Security demands not only holistic security coverage but also a solution that is easy to administer and that enforces consistent policy across all data, no matter where it is stored and processed.

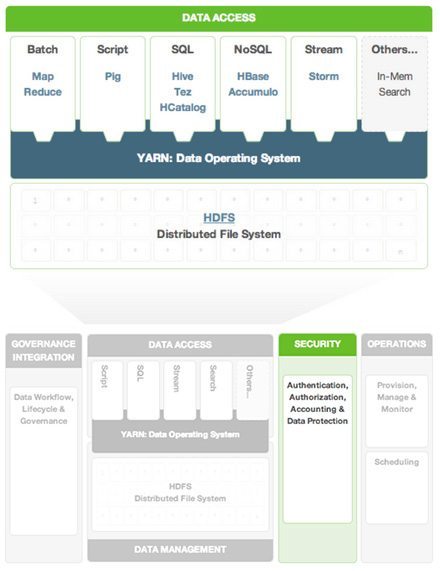

Apache Hadoop originated as a simple project at the Apache Software Foundation (ASF) to manage and access data, and included just two components: the Hadoop Distributed File System (HDFS) and MapReduce, a processing framework for data stored in HDFS. As Hadoop matured, so too did the business cases built around it, and new processing and access engines were required not only for batch but also for interactive, real-time, and streaming use cases.

A comprehensive and integrated framework for securing data irrespective of how the data is stored and accessed is needed. Enterprises may adopt any use case (batch, real time, interactive), but data should be secured through the same standards, and security should be administered centrally and in one place.

This belief is the foundation of HDP Security, and we endeavor to continually develop and execute on our security roadmap with this vision of looking top-down at the entire Hadoop data platform.

HDP Security, now with XA Secure

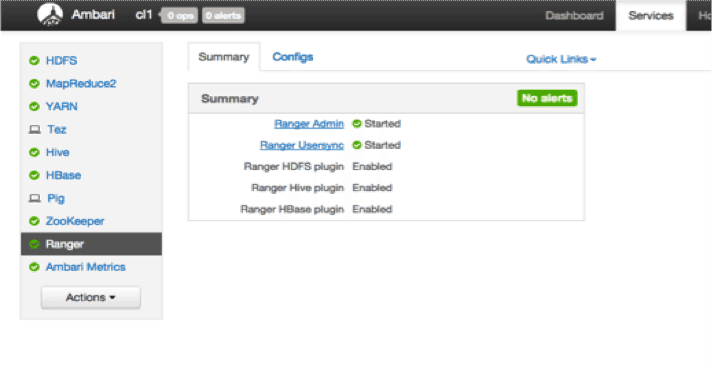

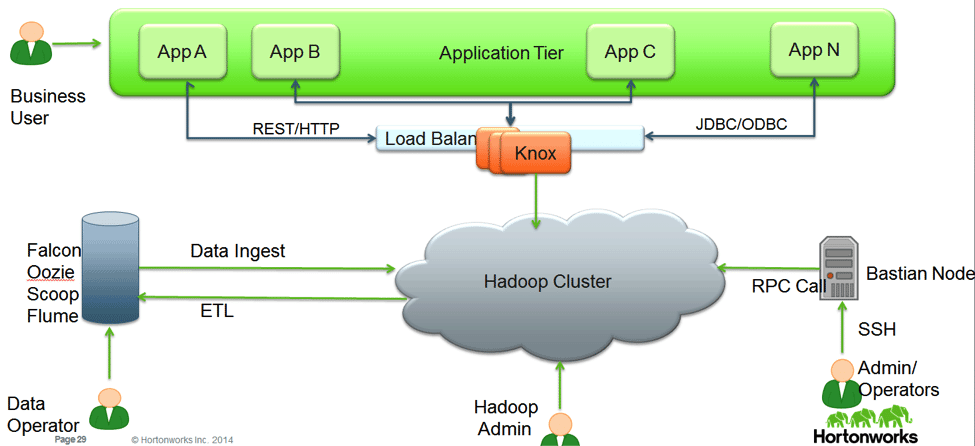

Hortonworks Data Platform provides a centralized approach to security management, which allows you to define and deploy consistent security policy throughout the data platform. With the addition of XA Secure, it allows you to easily create and administer a central security policy while coordinating consistent enforcement across all Hadoop applications.

HDP provides a comprehensive set of critical features for authentication, authorization, audit and data protection so that you can apply enterprise grade security to your Hadoop deployment. We continue to look at security within these four pillars.

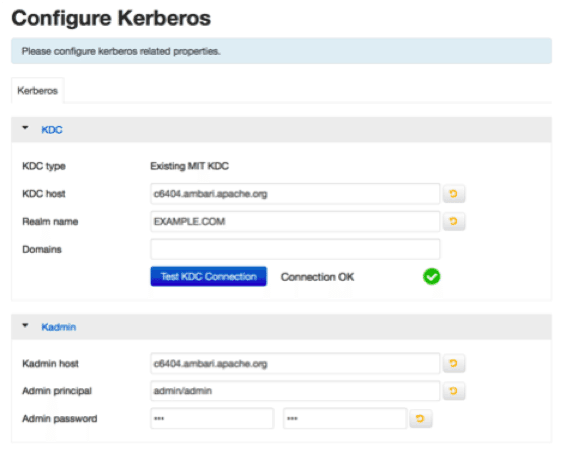

Authentication

Authentication is the first step in the security process, ensuring that a user is who he or she claims to be. We continue to provide the native authentication capabilities already available in HDP, through simple authentication or through Kerberos. Apache Knox provides a single point of authentication/access for your cluster and integrates with your existing LDAP or Active Directory implementations.

Authorization

Authorization, or entitlement, is the process of ensuring users have access only to the data their corporate policies permit. Hadoop already provides fine-grained authorization via file permissions in HDFS, resource-level access control for YARN and MapReduce, and coarser-grained access control at a service level. HBase provides authorization with ACLs on tables and column families, while Accumulo extends this further to cell-level control. Apache Hive provides Grant/Revoke access control on tables.
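
To make the HDFS file-permission model concrete, here is a minimal sketch of a POSIX-style owner/group/other check. It is an illustration only, not HDFS code: the `FileStatus` class, the `is_allowed` function, and the sample users and groups are all hypothetical, and the sketch ignores the superuser and any extended ACLs.

```python
from dataclasses import dataclass

READ, WRITE, EXECUTE = 4, 2, 1  # POSIX-style permission bits


@dataclass(frozen=True)
class FileStatus:
    """Hypothetical stand-in for the per-file metadata a file system keeps."""
    owner: str
    group: str
    mode: int  # octal mode, e.g. 0o640


def is_allowed(status, user, user_groups, wanted):
    """Select the owner, group, or other bits, then test the wanted bit."""
    if user == status.owner:
        bits = (status.mode >> 6) & 0o7
    elif status.group in user_groups:
        bits = (status.mode >> 3) & 0o7
    else:
        bits = status.mode & 0o7
    return (bits & wanted) == wanted


# Made-up example: owner may read and write, group may read, others get nothing.
claims = FileStatus(owner="etl", group="analysts", mode=0o640)
print(is_allowed(claims, "etl", {"etl"}, WRITE))
print(is_allowed(claims, "alice", {"analysts"}, READ))
print(is_allowed(claims, "bob", {"marketing"}, READ))
```

Only one class of bits is consulted per request, so an owner whose own bits deny access is not rescued by more permissive group bits.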

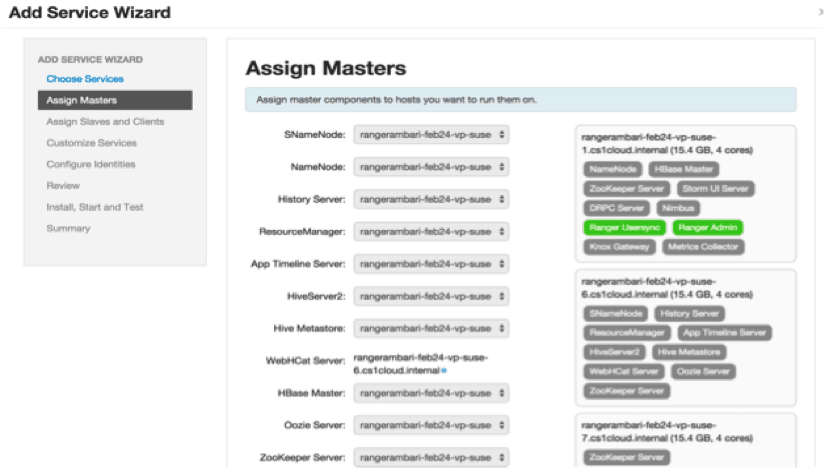

With the addition of XA Secure, Hadoop now adds authorization features that help enterprises securely use varied data with multiple user groups while ensuring proper entitlements. It provides an intuitive way for users to specify entitlement policies for HDFS, HBase, and Hive with a centralized administration interface and extended authorization enforcement. Our goal is to provide a common authorization framework for HDP, giving security administrators a single administrative console to manage all the authorization policies for HDP components.
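
The idea of one policy store serving several components can be sketched as follows. This is not the XA Secure policy format or API; the `Policy` class, the `is_authorized` function, the resource naming, and the sample policies are assumptions made purely for illustration.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Policy:
    """Hypothetical policy record held in one central store."""
    component: str          # "hdfs", "hbase", or "hive"
    resource: str           # wildcard pattern, e.g. "/data/finance/*"
    groups: frozenset       # groups the policy applies to
    permissions: frozenset  # actions the policy grants


# Made-up policies covering three components from a single list.
POLICIES = [
    Policy("hdfs", "/data/finance/*", frozenset({"finance"}),
           frozenset({"read", "write"})),
    Policy("hive", "sales.orders", frozenset({"analysts"}),
           frozenset({"select"})),
    Policy("hbase", "patients:demographics", frozenset({"clinicians"}),
           frozenset({"read"})),
]


def is_authorized(component, resource, user_groups, permission,
                  policies=POLICIES):
    """Deny by default; allow when any single policy matches the request."""
    for policy in policies:
        if (policy.component == component
                and fnmatchcase(resource, policy.resource)
                and policy.groups & set(user_groups)
                and permission in policy.permissions):
            return True
    return False


print(is_authorized("hdfs", "/data/finance/q1.csv", {"finance"}, "read"))
print(is_authorized("hive", "sales.orders", {"finance"}, "select"))
```

Because every component consults the same list, an administrator changes entitlements in one place rather than once per component.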

Audit & Accountability

One of the cornerstones of any security system is accountability: having audit data that lets auditors control the system and check for regulatory compliance, for example HIPAA compliance in healthcare. Healthcare providers would look within audit data for the access history of sensitive data such as patient records, and provide that data if requested by a patient or a regulatory authority. Having robust audit data helps enterprises better manage their regulatory compliance needs and control the environment proactively. XA Secure provides a centralized framework for collecting access audit history and for easy reporting on the data. The data can be filtered based on various parameters. Our goal is to enhance the audit information that is captured within the various Hadoop components and provide insights through centralized reporting.
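
Filtering access history by parameters can be pictured with a small sketch. The `AuditEvent` fields, the `filter_events` helper, and the sample events are hypothetical and are not taken from XA Secure's audit schema.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class AuditEvent:
    """Hypothetical record of one access attempt."""
    timestamp: datetime
    user: str
    component: str
    resource: str
    action: str
    allowed: bool


def filter_events(events, **criteria):
    """Return the events whose fields equal every given criterion."""
    return [event for event in events
            if all(getattr(event, name) == value
                   for name, value in criteria.items())]


# Made-up events standing in for a centrally collected audit trail.
events = [
    AuditEvent(datetime(2014, 6, 2, 9, 15), "alice", "hive",
               "patients.records", "select", True),
    AuditEvent(datetime(2014, 6, 2, 9, 40), "bob", "hdfs",
               "/data/patients/2014.csv", "read", False),
    AuditEvent(datetime(2014, 6, 3, 14, 5), "alice", "hbase",
               "patients:demographics", "read", True),
]

for event in filter_events(events, user="alice", allowed=True):
    print(event.timestamp.isoformat(), event.component, event.resource)
```

A request such as "who was denied access to patient data" becomes `filter_events(events, allowed=False)` in this sketch.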

Data Protection

Data protection involves protecting data at rest and in motion, and includes encryption and masking. Encryption provides an added layer of security by protecting data when it is transferred and when it is stored (at rest), while masking capabilities enable security administrators to desensitize PII for display or temporary storage. We will continue to leverage the existing capabilities in HDP for encrypting data in flight, while bringing forward partner solutions for encrypting data at rest, data discovery, and data masking.
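
As a simple illustration of masking PII for display, the sketch below hides all but the last four digits of a US Social Security number. The pattern, the `mask_ssn` function, and the sample text are assumptions for illustration and do not represent any HDP or partner masking feature.

```python
import re

# Matches SSNs written as 123-45-6789 and captures the last four digits.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-(\d{4})\b")


def mask_ssn(text):
    """Replace the first five digits of each SSN, keeping the last four."""
    return SSN_PATTERN.sub(r"XXX-XX-\1", text)


print(mask_ssn("Patient SSN 123-45-6789 on file"))
# prints: Patient SSN XXX-XX-6789 on file
```

Masking of this kind changes only what is shown; the stored value still needs encryption or access control to be protected.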

…and Central Administration

With central administration, a consistent security policy can be defined across all Hadoop data and access methods. With XA Secure, HDP now provides a security administration console that is unique to HDP but will be delivered completely in the open for all.

Conclusion

Hadoop requires enterprises to look at security in a new way. With the merger of XA Secure and Hortonworks, the combined team will work together and continue to execute on our joint vision. Currently, XA Secure adds the following functionality to the security features within HDP 2.1:

- Centralized Security administration

- Fine-grained access control over HDFS, HBase and Hive

- Centralized Auditing

- Delegated Administration

What’s Next

In our next series of blog posts, we will explore the technical architecture of the XA solution and how the concepts around authorization and auditing work within the different components of the Hadoop ecosystem. We will continue to add more features in the near term, toward our vision of enabling comprehensive security within HDP.

Learn More

- Download the Sandbox with new HDP security and read through security solution tutorials.

- Register for the webinar: Hadoop Security with XA Secure and Hortonworks Data Platform

The post Hortonworks Offers Holistic and Comprehensive Security for Hadoop appeared first on Hortonworks.